A Comprehensive Exploration of Types of Reinforcement Learning Techniques

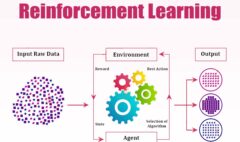

Reinforcement learning (RL) is a branch of machine learning in which an agent learns how to behave by interacting with an environment. The agent takes actions, observes the resulting states and rewards, and adjusts its behavior to maximize cumulative reward over time. Because the agent must make sequences of decisions whose consequences may only appear later, RL suits problems such as game playing, robotic control, and resource allocation. RL methods can be grouped along several overlapping axes, so a single algorithm can belong to more than one of the categories below. For example, Q-learning is model-free, value-based, temporal-difference, and off-policy all at once. This article gives an overview of these categories, their characteristics, and their applications.

1) Model-Based Reinforcement Learning

Model-based RL uses a model of the environment's dynamics (how states transition and which rewards follow each action) so that the agent can plan before acting. The model is usually learned from experience, although in some settings, such as board games with known rules, it is given. The approach has two main components: model learning, which builds a representation of how the environment behaves, and planning, which uses that representation to simulate outcomes and choose actions that lead to desired results.
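To make the two phases concrete, the following Python sketch learns a tabular model from random interaction and then plans with value iteration on that learned model alone. The five-state chain environment, the function names `env_step`, `learn_model`, and `plan`, and the hyperparameters (`GAMMA = 0.9`, 2,000 samples) are hypothetical choices for illustration; value iteration is just one possible planner.

```python
import random
from collections import defaultdict

N_STATES = 5          # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right
GAMMA = 0.9


def env_step(state, action):
    """Hypothetical true dynamics; the agent only sees sampled outputs."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def learn_model(num_samples=2000, seed=0):
    """Model learning: estimate P(s'|s,a) and E[r|s,a] from random interaction."""
    rng = random.Random(seed)
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    visits = defaultdict(int)
    for _ in range(num_samples):
        s = rng.randrange(N_STATES - 1)   # sample a non-terminal state
        a = rng.choice(ACTIONS)
        s2, r = env_step(s, a)
        counts[(s, a)][s2] += 1
        reward_sum[(s, a)] += r
        visits[(s, a)] += 1
    P = {sa: {s2: c / visits[sa] for s2, c in nxt.items()} for sa, nxt in counts.items()}
    R = {sa: reward_sum[sa] / visits[sa] for sa in visits}
    return P, R


def plan(P, R, iters=100):
    """Planning: value iteration that consults only the learned model."""
    V = [0.0] * N_STATES                  # terminal state keeps value 0

    def q(s, a):
        return R[(s, a)] + GAMMA * sum(p * V[s2] for s2, p in P[(s, a)].items())

    for _ in range(iters):
        V = [0.0 if s == N_STATES - 1 else max(q(s, a) for a in ACTIONS)
             for s in range(N_STATES)]
    policy = [max(ACTIONS, key=lambda a: q(s, a)) for s in range(N_STATES - 1)]
    return V, policy


P, R = learn_model()
V, policy = plan(P, R)
print(policy)  # greedy action for each non-terminal state
```

On this deterministic chain the learned model matches the true dynamics, so the planner should choose "move right" (action 1) in every non-terminal state. In a stochastic environment the counts would only estimate the transition probabilities.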

Applications:

- Robotics: Model-based RL helps robots simulate different actions and predict their outcomes, aiding in tasks such as navigation and manipulation.

- Games: Planning-based approaches are employed in game-playing agents to simulate future states and make strategic decisions.

2) Model-Free Reinforcement Learning

Unlike model-based RL, model-free RL does not build or use an explicit model of the environment's dynamics. Instead, it learns directly from interaction, either by estimating how good actions are in different states or by adjusting the policy itself. Model-free methods typically learn value functions, policies, or both.

Types of Model-Free RL:

- Value-Based Methods: These algorithms estimate the value (expected cumulative reward) of states or state-action pairs and act by choosing high-value actions. Q-learning and Deep Q-Networks (DQN) fall into this category.

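- Policy-Based Methods: These methods parameterize the policy, the mapping from states to actions (or to probabilities over actions), and optimize it directly, typically by gradient ascent on expected return. REINFORCE is a classic example. Proximal Policy Optimization (PPO) is also a policy-gradient method, although it is usually paired with a learned value function in an actor-critic setup.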
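As a minimal sketch of a value-based method, here is tabular Q-learning in Python on a hypothetical five-state chain where only reaching the right end pays a reward. The environment, the function names, and the hyperparameters (`ALPHA`, `GAMMA`, `EPSILON`) are illustrative assumptions. Note that the agent never builds a transition model; it only updates its Q-table from sampled transitions.

```python
import random

N_STATES = 5                      # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)                  # 0 = left, 1 = right
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1


def env_step(state, action):
    """Hypothetical environment; the agent only observes its outputs."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy behavior policy; ties are broken at random
            if rng.random() < EPSILON or Q[s][0] == Q[s][1]:
                a = rng.choice(ACTIONS)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s2, r, done = env_step(s, a)
            # the target uses the greedy (max) value of the next state
            target = r if done else r + GAMMA * max(Q[s2])
            Q[s][a] += ALPHA * (target - Q[s][a])
            s = s2
    return Q


Q = q_learning()
greedy = [0 if q[0] > q[1] else 1 for q in Q[:-1]]
print(greedy)  # greedy action for each non-terminal state
```

DQN follows the same update idea but replaces the table with a neural network and adds components such as experience replay and a target network.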

Applications:

- Autonomous Vehicles: Model-free RL has been used to learn driving policies, typically trained largely in simulation because learning purely from real-world trial and error is costly and unsafe.

- Finance: Model-free RL is applied in algorithmic trading to learn trading and investment strategies from market data.

3) Actor-Critic Reinforcement Learning

Actor-critic methods combine value-based and policy-based RL. The actor maintains the policy and selects actions; the critic learns a value function and uses it to evaluate those actions. The critic's evaluation, often a temporal-difference error or an advantage estimate, tells the actor whether an action turned out better or worse than expected, and the actor shifts its policy accordingly. Using a learned critic instead of full-episode returns typically reduces the variance of the policy updates.
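The sketch below shows a one-step tabular actor-critic in Python on a hypothetical five-state chain environment. The actor is a softmax over per-state action preferences (`theta`), the critic is a table of state values (`V`), and the critic's TD error `delta` is the feedback that scales the actor's policy-gradient step. The names, step sizes, and environment are assumptions for illustration, and the sketch omits the gamma^t factor that some textbook formulations include.

```python
import math
import random

N_STATES = 5                      # hypothetical chain: states 0..4, state 4 is terminal
GAMMA = 0.9
ALPHA_ACTOR, ALPHA_CRITIC = 0.1, 0.1


def env_step(state, action):
    """Hypothetical environment: action 0 moves left, action 1 moves right."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def softmax(prefs):
    m = max(prefs)                # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(episodes=1000, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]   # actor: action preferences
    V = [0.0] * N_STATES                            # critic: state values
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            probs = softmax(theta[s])
            a = 0 if rng.random() < probs[0] else 1   # sample from the actor
            s2, r, done = env_step(s, a)
            # critic's TD error: the feedback signal for both components
            delta = r + (0.0 if done else GAMMA * V[s2]) - V[s]
            V[s] += ALPHA_CRITIC * delta
            # actor: gradient of log-softmax w.r.t. preferences is one_hot(a) - probs
            for b in (0, 1):
                grad = (1.0 if b == a else 0.0) - probs[b]
                theta[s][b] += ALPHA_ACTOR * delta * grad
            s = s2
    return theta, V


theta, V = actor_critic()
p_right = [softmax(theta[s])[1] for s in range(N_STATES - 1)]
print([round(p, 2) for p in p_right])  # probability of moving right per state
```

Moving right leads toward higher-valued states, so its TD errors tend to be positive and the actor's probability of choosing it grows in every state.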

Applications:

- Robotics: Actor-critic methods are utilized in robotic control tasks, allowing agents to learn complex motor skills and manipulate objects effectively.

- Natural Language Processing: These techniques are applied in language generation tasks, such as dialogue systems and text generation.

4) Temporal Difference Methods

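Temporal difference (TD) methods update value estimates using the TD error: the difference between the current prediction for a state and a target formed from the observed reward plus the discounted prediction for the next state. Using one estimate to update another in this way is called bootstrapping. Because updates happen after every step rather than at the end of an episode, TD methods can learn online and often learn faster than Monte Carlo methods, which must wait for the final return.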
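A minimal Python sketch of TD(0) prediction follows. It estimates state values for a random walk over five non-terminal states, a standard textbook example in which the true values are 1/6 through 5/6. Each step applies V(s) <- V(s) + alpha * (r + gamma * V(s') - V(s)). The step size, episode count, and function name are assumptions chosen for illustration.

```python
import random

# Hypothetical 5-state random walk: non-terminal states 1..5, terminals 0 and 6.
# Stepping off the right end pays 1; every other transition pays 0.
TRUE_VALUES = [i / 6 for i in range(1, 6)]


def td0_random_walk(episodes=2000, alpha=0.02, gamma=1.0, seed=0):
    rng = random.Random(seed)
    V = [0.0] + [0.5] * 5 + [0.0]          # terminal states keep value 0
    for _ in range(episodes):
        s = 3                               # start in the middle state
        while s not in (0, 6):
            s2 = s + rng.choice((-1, 1))    # fixed policy: step left or right at random
            r = 1.0 if s2 == 6 else 0.0
            # TD(0): move V(s) toward the bootstrapped target r + gamma * V(s')
            V[s] += alpha * (r + gamma * V[s2] - V[s])
            s = s2
    return V[1:6]


estimates = td0_random_walk()
print([round(v, 2) for v in estimates])
print([round(v, 2) for v in TRUE_VALUES])
```

With a constant step size the estimates keep fluctuating slightly around the true values; a smaller `alpha` reduces that noise at the cost of slower learning.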

Applications:

- Recommendation Systems: TD methods help estimate the long-term value of recommending an item, supporting personalized recommendations that account for future user engagement rather than only immediate preferences.

- Healthcare: RL methods based on temporal difference learning have been studied for optimizing treatment plans and resource allocation.

5) Off-Policy Reinforcement Learning

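Off-policy RL methods learn about one policy (the target policy) from data generated by another (the behavior policy). That data can come from historical logs, from earlier versions of the agent stored in a replay buffer, or from an exploratory policy running alongside the one being improved. Because past experience can be reused, off-policy algorithms tend to be more sample-efficient and can learn from diverse data sources. The trade-off is that the mismatch between the two policies must be handled, for example with importance sampling. Q-learning and DQN are common off-policy algorithms.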
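One standard ingredient for learning from logged data is importance sampling. The Python sketch below evaluates a target policy using only data logged by a different behavior policy in a hypothetical two-action bandit (for example, two ads with unknown click rates). The click probabilities, both policies, and the function names are invented for illustration. Full off-policy RL algorithms extend this reweighting to multi-step returns or, like one-step Q-learning, avoid it by bootstrapping from the greedy action.

```python
import random

# Hypothetical logged-bandit setting: two ads (actions 0 and 1) whose
# click probabilities are unknown to the learner.
CLICK_PROB = (0.2, 0.8)
BEHAVIOR = (0.5, 0.5)    # b(a): the policy that generated the log
TARGET = (0.1, 0.9)      # pi(a): the policy we want to evaluate


def collect_log(n=20000, seed=0):
    """Simulate historical data gathered by the behavior policy."""
    rng = random.Random(seed)
    log = []
    for _ in range(n):
        a = 0 if rng.random() < BEHAVIOR[0] else 1
        r = 1.0 if rng.random() < CLICK_PROB[a] else 0.0
        log.append((a, r))
    return log


def importance_sampling_estimate(log):
    """Ordinary importance sampling: reweight each reward by pi(a) / b(a)."""
    return sum(TARGET[a] / BEHAVIOR[a] * r for a, r in log) / len(log)


log = collect_log()
naive = sum(r for _, r in log) / len(log)       # estimates the behavior policy instead
is_est = importance_sampling_estimate(log)
true_target = sum(p * c for p, c in zip(TARGET, CLICK_PROB))  # known only in simulation
print(round(naive, 3), round(is_est, 3), round(true_target, 3))
```

The naive average of the logged rewards should land near 0.5, the behavior policy's value, while the reweighted estimate should land near the target policy's value of 0.74.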

Applications:

- Marketing and Advertising: Off-policy RL is utilized in optimizing advertising strategies by learning from historical data to improve future marketing campaigns.

- Personalized Content Delivery: These methods aid in delivering personalized content by learning from diverse user behaviors and preferences.

Conclusion

Reinforcement learning encompasses a diverse range of techniques, each with its own strengths and applications. Model-based methods plan with a model of the environment, model-free methods learn values or policies directly from experience, actor-critic methods pair a learned policy with a learned value function, temporal difference methods bootstrap from their own estimates, and off-policy methods reuse data generated by other policies. Practical algorithms often combine several of these ideas. Understanding the distinctions helps researchers and practitioners choose suitable methods for complex problems across industries.